1. ServiceAccount Cluster Role Binding

Context -

You have been asked to create a new ClusterRole for a deployment pipeline and bind it to a specific ServiceAccount scoped to a specific namespace.

Task -

Create a new ClusterRole named deployment-clusterrole, which only allows creating the following resource types:

✑ Deployment

✑ StatefulSet

✑ DaemonSet

Create a new ServiceAccount named cicd-token in the existing namespace app-team1.

Bind the new ClusterRole deployment-clusterrole to the new ServiceAccount cicd-token, limited to the namespace app-team1.

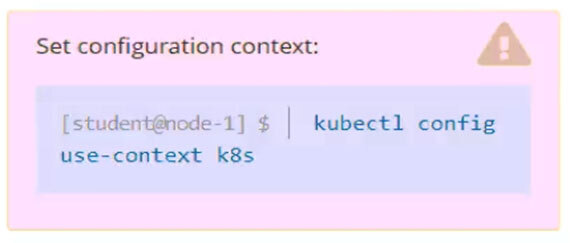

kubectl config current-context

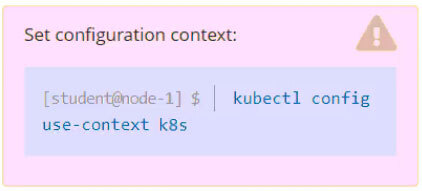

kubectl config use-context k8s

1. serviceaccount

kubectl create serviceaccount cicd-token -n app-team1

kubectl get sa -n app-team1

2. clusterrole

kubectl create clusterrole deployment-clusterrole --verb=create --resource=deployment,statefulset,daemonset

kubectl get clusterrole deployment-clusterrole

3. rolebinding (the task limits the binding to namespace app-team1, so use a RoleBinding that references the ClusterRole)

kubectl create rolebinding deployment-clusterrole-binding --clusterrole=deployment-clusterrole --serviceaccount=app-team1:cicd-token --namespace=app-team1
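The same objects can also be written declaratively. The block below is only a sketch: the file name rbac-cicd.yaml is made up for illustration, and it uses a RoleBinding because the task restricts the grant to app-team1. The kubectl lines are left as comments since they need a live cluster.

```shell
# Write the ClusterRole and a namespaced RoleBinding to a file.
# rbac-cicd.yaml is a hypothetical file name.
cat > rbac-cicd.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: deployment-clusterrole
rules:
- apiGroups: ["apps"]
  resources: ["deployments", "statefulsets", "daemonsets"]
  verbs: ["create"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: deployment-clusterrole-binding
  namespace: app-team1
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: deployment-clusterrole
subjects:
- kind: ServiceAccount
  name: cicd-token
  namespace: app-team1
EOF

# Against a live cluster (not run here):
#   kubectl apply -f rbac-cicd.yaml
#   kubectl auth can-i create deployments -n app-team1 \
#     --as=system:serviceaccount:app-team1:cicd-token
```

The can-i check should answer yes inside app-team1 and no in any other namespace.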

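2. Drain node

Set the node named ek8s-node-0 as unavailable and reschedule all the pods running on it.

k get nodes

k drain ek8s-node-0 --ignore-daemonsets

Leave the node cordoned. Running k uncordon ek8s-node-0 would make it schedulable again, which the task does not ask for.

k get nodes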

3. Upgrade

Given an existing Kubernetes cluster running version 1.22.1, upgrade all of the Kubernetes control plane and node components on the master node only to version 1.22.2.

Be sure to drain the master node before upgrading it and uncordon it after the upgrade.

You are also expected to upgrade kubelet and kubectl on the master node.

1. Search the docs for "upgrade"

2. Determine the target version, upgrade kubeadm, then run kubeadm upgrade apply

3. Console: drain the node

4. Upgrade kubelet and kubectl

5. Console: uncordon the node

6. Verify with k get nodes
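The on-node part of those steps can be sketched as a small script. This is an illustration under assumptions, not the exam's exact procedure: it assumes a Debian/Ubuntu node with apt, a package revision suffix of -00, and it uses a hypothetical run helper that only prints each command unless DRY_RUN=0 is set. The drain and uncordon steps are run from the console, not from this script.

```shell
# Dry-run by default: print each command instead of executing it.
# Set DRY_RUN=0 to really execute (as root, on the master node).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# upgrade_master VERSION   (for example: upgrade_master 1.22.2)
upgrade_master() {
  version="$1"
  pkg="${version}-00"   # assumption: Debian/Ubuntu package revision suffix

  # Upgrade kubeadm first, then let it upgrade the control plane.
  run apt-get update
  run apt-get install -y --allow-change-held-packages "kubeadm=${pkg}"
  run kubeadm upgrade plan
  run kubeadm upgrade apply "v${version}"

  # Upgrade kubelet and kubectl, then restart the kubelet.
  run apt-get install -y --allow-change-held-packages "kubelet=${pkg}" "kubectl=${pkg}"
  run systemctl daemon-reload
  run systemctl restart kubelet
}

upgrade_master 1.22.2
```

Printing the plan first makes it easy to check the version strings before anything is changed on the node.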

4. Back up, Restore

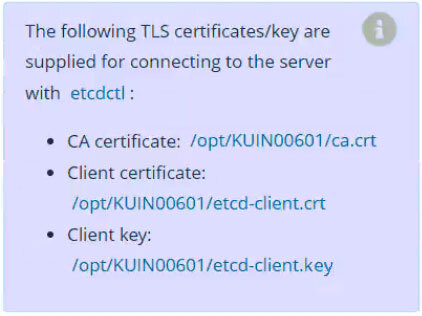

Task -

First, create a snapshot of the existing etcd instance running at https://127.0.0.1:2379, saving the snapshot to /var/lib/backup/etcd-snapshot.db.

Next, restore an existing, previous snapshot located at /var/lib/backup/etcd-snapshot-previous.db.

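Caution: on restore, the --data-dir value (/var/lib/etcd-new) must be the same as the etcd-data hostPath path: /var/lib/etcd-new in /etc/kubernetes/manifests/etcd.yaml.

Remember: ETCDCTL_API=3 etcdctl --data-dir=/var/lib/etcd-new snapshot restore /data/etcd-snapshot-previous.db

Note: the commands in these notes use /data/...; with the task as worded above, use /var/lib/backup/etcd-snapshot.db and /var/lib/backup/etcd-snapshot-previous.db instead.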

k config current-context

k config use-context k8s

k config use-context hk8s

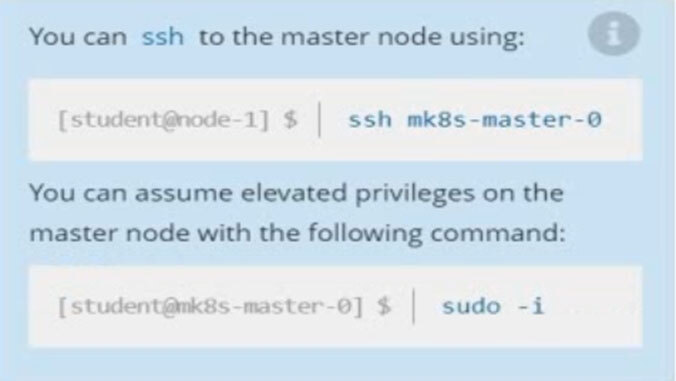

ssh k8s-master

sudo -i

ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key \
  snapshot save /data/etcd-snapshot.db

ls -l /data/etcd-snapshot.db

-rw-------. 1 root root 4861984 Dec 24 11:52 /data/etcd-snapshot.db

# restore

ls -al /data/etcd-snapshot-previous.db

ETCDCTL_API=3 etcdctl --data-dir=/var/lib/etcd-new snapshot restore /data/etcd-snapshot-previous.db

ls -al /var/lib/etcd-new

vi /etc/kubernetes/manifests/etcd.yaml

- change the etcd data path in the etcd-data volume:

  - hostPath:
      path: /var/lib/etcd-new
      type: DirectoryOrCreate
    name: etcd-data

docker ps -a | grep etcd

5.

SIMULATION -

Task -

Create a new NetworkPolicy named allow-port-from-namespace in the existing namespace fubar.

Ensure that the new NetworkPolicy allows Pods in namespace internal to connect to port 9000 of Pods in namespace fubar.

Further ensure that the new NetworkPolicy:

✑ does not allow access to Pods, which don't listen on port 9000

✑ does not allow access from Pods, which are not in namespace internal

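Probably this:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-port-from-namespace
  namespace: fubar
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: internal
    ports:
    - protocol: TCP
      port: 9000

The kubernetes.io/metadata.name label is set automatically on namespaces in v1.21 and later. If it is missing, check k get ns internal --show-labels and select on a label that exists.

SIMULATION -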

Task -

Reconfigure the existing deployment front-end and add a port specification named http exposing port 80/tcp of the existing container nginx.

Create a new service named front-end-svc exposing the container port http. Configure the new service to also expose the individual Pods via a NodePort on the nodes on which they are scheduled.

kubectl get deploy front-end -o yaml > front-end.yaml

vi front-end.yaml → under the nginx container, add:

        ports:
        - name: http
          containerPort: 80
          protocol: TCP

kubectl apply -f front-end.yaml

vi front-end-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: front-end-svc
spec:
  type: NodePort
  selector:
    run: nginx
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: http

The selector must match the Pod labels of front-end; run: nginx is a guess. Check with kubectl get deploy front-end -o jsonpath='{.spec.selector.matchLabels}'.

kubectl apply -f front-end-svc.yaml

kubectl get deploy,svc

7. deployment scale out

Task -

Scale the deployment presentation to 3 pods.

k get deploy presentation

k scale deploy presentation --replicas=3

k get pods

8. nodeselector

Reference: Assign Pods to Nodes

Task -

Schedule a pod as follows:

✑ Name: nginx-kusc00401

✑ Image: nginx

✑ Node selector: disk=ssd

k run nginx-kusc00401 --image=nginx --dry-run=client -o yaml > nginx-kusc00401.yaml

vi nginx-kusc00401.yaml → add only the nodeSelector block (copy it from the docs)
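For reference, the edited manifest could end up looking like the sketch below. Writing the file with a heredoc is just an alternative to editing in vi; the run label and container name mirror what k run normally generates and are otherwise incidental.

```shell
# Write the Pod manifest with the nodeSelector already in place.
cat > nginx-kusc00401.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-kusc00401
  labels:
    run: nginx-kusc00401
spec:
  containers:
  - name: nginx-kusc00401
    image: nginx
  # Only nodes labelled disk=ssd are eligible for this Pod.
  nodeSelector:
    disk: ssd
EOF
```

If no node carries the disk=ssd label, the Pod stays Pending; k get nodes -l disk=ssd shows the candidates.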

k apply -f nginx-kusc00401.yaml

SIMULATION -

Task -

Check to see how many nodes are ready (not including nodes tainted NoSchedule) and write the number to /opt/KUSC00402/kusc00402.txt.

k get nodes | grep -i -w ready

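For each Ready node:

k describe node <node-name> | grep -i NoSchedule

-> if this prints a match, exclude that node from the count

echo <count> > /opt/KUSC00402/kusc00402.txt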
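The manual check above can be scripted. The sketch below is one possible approach, not something from the exam: the function names list_nodes and count_ready_schedulable are made up, and it assumes kubectl's jsonpath output joins multiple taint effects with spaces. list_nodes needs cluster access, so it is defined but not called.

```shell
# list_nodes prints one tab-separated row per node:
# NAME, Ready condition status, taint effects.
list_nodes() {
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.conditions[?(@.type=="Ready")].status}{"\t"}{.spec.taints[*].effect}{"\n"}{end}'
}

# count_ready_schedulable reads those rows on stdin and counts nodes whose
# Ready condition is True and that carry no taint with effect NoSchedule.
# PreferNoSchedule is compared as a whole word, so it is not excluded.
count_ready_schedulable() {
  awk -F '\t' '
    $2 == "True" {
      tainted = 0
      n = split($3, effects, " ")
      for (i = 1; i <= n; i++) if (effects[i] == "NoSchedule") tainted = 1
      if (!tainted) count++
    }
    END { print count + 0 }
  '
}

# Intended use on the exam host (not run here):
#   list_nodes | count_ready_schedulable > /opt/KUSC00402/kusc00402.txt
```

Keeping the parsing separate from the kubectl call makes it possible to try the counting logic on sample rows first.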

SIMULATION -

Task -

Schedule a Pod as follows:

✑ Name: kucc8

✑ App Containers: 2

✑ Container Name/Images:

- nginx

- consul

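k run kucc8 --image=nginx --dry-run=client -o yaml > kucc8.yaml

Edit kucc8.yaml: name the first container nginx and add a second container named consul (image consul)

k apply -f kucc8.yaml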
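As a reference for the edit step, kucc8.yaml could end up like the sketch below. It assumes the container names simply match the image names, which is how the task lists them; the heredoc is only a convenient way to show the whole file.

```shell
# Write a two-container Pod manifest for kucc8.
cat > kucc8.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: kucc8
spec:
  containers:
  - name: nginx
    image: nginx
  - name: consul
    image: consul
EOF
```

After applying it, k get pod kucc8 should report 2/2 in the READY column once both containers are up.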

SIMULATION -

Task -

Create a persistent volume with name app-data, of capacity 2Gi and access mode ReadOnlyMany. The type of volume is hostPath and its location is /srv/app-data.
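The notes have no answer for this one. A minimal sketch of a manifest that matches the task follows; the file name app-data-pv.yaml is an arbitrary choice, and the kubectl lines are comments because they need a cluster.

```shell
# Write a hostPath PersistentVolume manifest for app-data.
# app-data-pv.yaml is a hypothetical file name.
cat > app-data-pv.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: app-data
spec:
  capacity:
    storage: 2Gi
  accessModes:
  - ReadOnlyMany
  hostPath:
    path: /srv/app-data
EOF

# Against a live cluster (not run here):
#   kubectl apply -f app-data-pv.yaml
#   kubectl get pv app-data
```

PersistentVolumes are cluster-scoped, so the manifest has no namespace field.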

SIMULATION -

Task -

Monitor the logs of pod foo and:

✑ Extract log lines corresponding to error file-not-found

✑ Write them to /opt/KUTR00101/foo

k get pod foo

k logs foo | grep 'file-not-found' > /opt/KUTR00101/foo

cat /opt/KUTR00101/foo

SIMULATION -

Context -

An existing Pod needs to be integrated into the Kubernetes built-in logging architecture (e.g. kubectl logs). Adding a streaming sidecar container is a good and common way to accomplish this requirement.

Task -

Add a sidecar container named sidecar, using the busybox image, to the existing Pod big-corp-app. The new sidecar container has to run the following command:

Use a Volume, mounted at /var/log, to make the log file big-corp-app.log available to the sidecar container.

SIMULATION -

Task -

From the pod label name=overloaded-cpu, find pods running high CPU workloads and write the name of the pod consuming most CPU to the file /opt/KUTR00401/KUTR00401.txt (which already exists).
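One way to approach this is kubectl top with a label selector. The sketch below assumes metrics-server is running and that the CPU column is printed in millicores (for example 250m); top_cpu_pod is a made-up helper name that only parses the rows, so it can be tried without a cluster.

```shell
# top_cpu_pod reads `kubectl top pod --no-headers` style rows on stdin
# (NAME  CPU(cores)  MEMORY(bytes)) and prints the name with the highest CPU.
top_cpu_pod() {
  # sort -n compares the leading number of the CPU column, so 250m sorts as 250.
  sort -k2,2 -n -r | head -n 1 | awk '{print $1}'
}

# Intended use on the exam host (not run here):
#   kubectl top pod -l name=overloaded-cpu --no-headers | top_cpu_pod > /opt/KUTR00401/KUTR00401.txt
#   cat /opt/KUTR00401/KUTR00401.txt
```

kubectl top pod also accepts --sort-by=cpu, which gives the same ordering when reading the table by eye.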

SIMULATION -

Task -

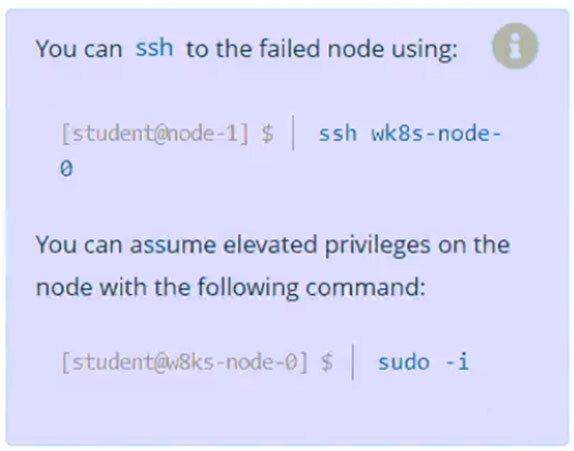

A Kubernetes worker node, named wk8s-node-0 is in state NotReady.

Investigate why this is the case, and perform any appropriate steps to bring the node to a Ready state, ensuring that any changes are made permanent.

k get nodes

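ssh wk8s-node-0 / sudo -i -> systemctl status kubelet -> systemctl restart kubelet, then systemctl enable --now kubelet (so the fix survives a reboot) -> systemctl status kubelet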

SIMULATION -

Task -

Create a new nginx Ingress resource as follows:

✑ Name: pong

✑ Namespace: ing-internal

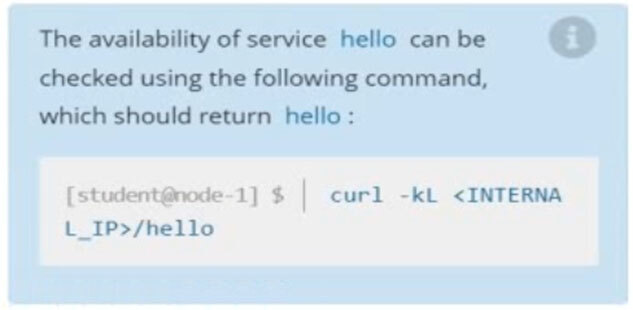

✑ Exposing service hello on path /hello using service port 5678

1. Search the docs for Ingress

2. Edit the example, then apply

3. kubectl get ingress pong -n ing-internal

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pong
  namespace: ing-internal
spec:
  ingressClassName: nginx-example
  rules:
  - http:
      paths:
      - path: /hello
        pathType: Prefix
        backend:
          service:
            name: hello
            port:
              number: 5678
